Many software experts have long predicted the demise of the Apache Hive data warehouse system, yet it is still used today to manage large volumes of data. Moreover, many Apache Hive features have been adopted by subsequent systems. It is therefore worth taking a closer look at Hive and its main applications.

What is Apache Hive?

Apache Hive is a scalable data warehouse system built on top of Apache Hadoop, the distributed storage and processing framework. In Hadoop architectures, complex computations are split into small tasks that are distributed and run in parallel across clusters of computing nodes. As a result, large volumes of data can be processed even on standard server and computer hardware. Within this architecture, Apache Hive acts as an integrated, open source query and analytics system for your data warehouse. Hive allows you to query, analyze and aggregate data using HiveQL, a query language similar to SQL. Hive also makes Hadoop data accessible to large groups of users.

Hive uses a syntax comparable to SQL:1999 for structuring programs, applications and databases and for integrating scripts. Before Hive, querying data in Hadoop required Java programming skills and knowledge of how to write MapReduce programs. Hive translates queries into a form the underlying execution engine can run, for example MapReduce jobs. Hive also makes it possible to connect other SQL-based applications to the Hadoop framework. Because SQL is so widely used, Hive, as an extension of Hadoop, makes it easier for non-specialists to work with databases and large data volumes.

How does Hive work?

Before Apache Hive complemented the Hadoop framework, the Hadoop ecosystem relied on MapReduce, a programming model originally developed at Google. In Hadoop 1, MapReduce was built directly into the framework as a standalone engine responsible for managing, monitoring and controlling computing resources and processes. Querying files in Hadoop therefore required solid Java skills.

Hive provides the following main functions for using and managing large volumes of data in Hadoop:

- data aggregation

- querying

- analysis

The operating principle of Hive is simple: queries and analyses written in HiveQL, through its SQL-like interface, are translated into MapReduce, Tez or Spark jobs that run on files stored in Hadoop. Hive organizes the data in the Hadoop framework into tables whose contents are stored in HDFS, the Hadoop Distributed File System. It queries data in a targeted way across the clusters and nodes of the Hadoop system. Standard operations such as filters, aggregations and joins are also available.
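To make the idea of translating a HiveQL query into a MapReduce job more concrete, the following Python sketch simulates the three phases for a query such as `SELECT country, COUNT(*) FROM visits GROUP BY country`. The table `visits`, its columns and the sample rows are hypothetical, and the code only mimics the map, shuffle and reduce steps in memory; it is not how Hive's compiler is actually implemented.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical rows of a "visits" table: (user_id, country)
ROWS = [
    ("u1", "DE"),
    ("u2", "FR"),
    ("u3", "DE"),
    ("u4", "US"),
    ("u5", "DE"),
]


def map_phase(rows: Iterable[tuple[str, str]]) -> Iterator[tuple[str, int]]:
    """Emit (group key, 1) for every row, as a mapper would for COUNT(*)."""
    for _user_id, country in rows:
        yield country, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Collect intermediate values by key, as the shuffle step does between mappers and reducers."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Aggregate the values of each key, as a reducer would (sorted by key for stable output)."""
    return {key: sum(values) for key, values in sorted(groups.items())}


def group_by_count(rows: Iterable[tuple[str, str]]) -> dict[str, int]:
    # Same result as: SELECT country, COUNT(*) FROM visits GROUP BY country
    return reduce_phase(shuffle_phase(map_phase(rows)))


if __name__ == "__main__":
    print(group_by_count(ROWS))  # {'DE': 3, 'FR': 1, 'US': 1}
```

In a real cluster, the map and reduce functions run on many nodes at once, which is where Hadoop's parallelism comes from; HiveQL spares you from writing these phases by hand.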

Hive and Schema-on-read

Unlike relational databases, which follow the schema-on-write (SoW) principle, Hive is based on the schema-on-read (SoR) principle: data is first stored as is in the Hadoop framework, without being forced into a predefined schema. Only when a Hive query runs is a schema applied to the data to fit your needs. The main advantage of this approach shows in cloud computing: it offers more scalability and flexibility, as well as faster loading times for databases distributed across different clusters.
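A minimal Python sketch of schema-on-read, assuming the raw data is comma-separated text: the lines are kept exactly as stored, and a schema (column names plus type conversions) is applied only when the data is read. Fields that are missing or cannot be converted become `None`, loosely mirroring how Hive returns NULL in such cases. The sample lines and both schemas are hypothetical.

```python
from typing import Callable, Iterable, Iterator, Optional

# Raw data stored "as is"; nothing is validated when these lines are written.
RAW_LINES = [
    "1,Alice,34",
    "2,Bob,not_a_number",
    "3,Carol",
]

# The schema only exists at read time: (column name, type conversion).
SCHEMA: list[tuple[str, Callable[[str], object]]] = [
    ("id", int),
    ("name", str),
    ("age", int),
]

# A different schema over the same raw data, applied without rewriting it.
TEXT_SCHEMA: list[tuple[str, Callable[[str], object]]] = [
    ("id", str),
    ("name", str),
]


def read_with_schema(
    lines: Iterable[str],
    schema: list[tuple[str, Callable[[str], object]]],
    delimiter: str = ",",
) -> Iterator[dict[str, Optional[object]]]:
    """Apply the schema while reading; missing or unparsable fields become None."""
    for line in lines:
        fields = line.split(delimiter)
        row: dict[str, Optional[object]] = {}
        for i, (name, convert) in enumerate(schema):
            if i >= len(fields):
                row[name] = None  # missing trailing field
                continue
            try:
                row[name] = convert(fields[i])
            except ValueError:
                row[name] = None  # value does not match the declared type
        yield row


if __name__ == "__main__":
    for row in read_with_schema(RAW_LINES, SCHEMA):
        print(row)
    for row in read_with_schema(RAW_LINES, TEXT_SCHEMA):
        print(row)
```

Because the schema is only a read-time interpretation, the same files can serve several schemas, and loading data costs no more than copying it.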

How do you work with data in Hive?

To query and analyze data with Hive, you work with Apache Hive tables, which are read following the schema-on-read pattern. Hive lets you organize the data in these tables into finer or coarser units: tables can be divided into partitions and further into "buckets", which are blocks of data. You can then use HiveQL, the SQL-like query language, to access the data you are interested in. Among other things, you can overwrite Hive tables, join them, append data to them and organize them into databases. Each Hive table also has its own HDFS directory.
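As a rough sketch of how partitions and buckets organize data, the Python example below computes where a row might land: each partition value becomes a `key=value` subdirectory under the table's HDFS directory, and the bucket is chosen by hashing a column modulo the number of buckets. `/user/hive/warehouse` is Hive's usual default warehouse location, but the table `sales`, its columns, the bucket file naming and the CRC32 hash are assumptions for illustration. Hive uses its own hash functions, so real bucket assignments will differ.

```python
import zlib

WAREHOUSE_DIR = "/user/hive/warehouse"  # Hive's usual default warehouse location


def bucket_for(value: str, num_buckets: int) -> int:
    """Choose a bucket by hashing the bucketing column.

    CRC32 is a deterministic stand-in chosen for this sketch, not Hive's hash function.
    """
    return zlib.crc32(value.encode("utf-8")) % num_buckets


def hdfs_location(table: str, partition: dict[str, str], bucket: int) -> str:
    """Build a path: one directory per table, one subdirectory per partition value."""
    partition_path = "/".join(f"{key}={value}" for key, value in partition.items())
    # Hypothetical bucket file name; real names depend on the Hive version and job
    return f"{WAREHOUSE_DIR}/{table}/{partition_path}/bucket_{bucket:05d}"


if __name__ == "__main__":
    # Hypothetical table "sales", roughly declared in HiveQL with
    # PARTITIONED BY (dt STRING) CLUSTERED BY (customer_id) INTO 4 BUCKETS
    row = {"customer_id": "c-42", "dt": "2024-01-15", "amount": "19.99"}
    b = bucket_for(row["customer_id"], num_buckets=4)
    print(hdfs_location("sales", {"dt": row["dt"]}, b))
```

The design benefit is that a query filtering on `dt` only needs to read one partition directory, and rows with the same `customer_id` always end up in the same bucket, which helps joins and sampling.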


Hive: main functions

Searching and analyzing large volumes of data and data sets stored as files in a Hadoop framework are among the main functions of Hive. Hive also translates HiveQL queries into MapReduce, Spark and Tez jobs.

Other notable features of Hive include the following:

- Storing metadata in relational database management systems

- Using compressed data in Hadoop systems

- Using UDFs (user-defined functions) for custom data processing and exploration

- Support for different storage types such as RCFile, plain text files or HBase

- Using MapReduce and supporting ETL processes
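Hive UDFs are usually implemented in Java. As a lighter-weight illustration of custom per-row processing in Python, the sketch below is written for Hive's `TRANSFORM` clause, which streams rows to an external script on standard input as tab-separated lines and reads tab-separated lines back. The script name `extract_domain.py`, the column layout (`user_id`, `url`), the table `clicks` and the domain-extraction logic are all hypothetical; after making the script available with `ADD FILE extract_domain.py;`, a query could invoke it with something like `SELECT TRANSFORM(user_id, url) USING 'python3 extract_domain.py' AS (user_id, domain) FROM clicks;`.

```python
import sys
from typing import Iterable, Iterator
from urllib.parse import urlparse


def extract_domain(url: str) -> str:
    """Custom per-row logic: return the lower-cased host part of a URL."""
    return urlparse(url).netloc.lower()


def transform_lines(lines: Iterable[str]) -> Iterator[str]:
    """Turn tab-separated (user_id, url) rows into tab-separated (user_id, domain) rows."""
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        if len(fields) != 2:
            continue  # this sketch simply skips malformed rows
        user_id, url = fields
        yield f"{user_id}\t{extract_domain(url)}"


def main() -> None:
    # Hive writes input rows to the script's stdin and reads output rows from its stdout
    for out_line in transform_lines(sys.stdin):
        print(out_line)


if __name__ == "__main__":
    main()
```

Keeping the logic in `transform_lines` separate from the stdin/stdout handling makes the script easy to test locally before running it inside Hive.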

What is HiveQL?

Hive is often described as "SQL-like" because of its query language, HiveQL: although HiveQL is based on SQL, it does not fully comply with standards such as SQL-92. HiveQL can therefore be considered a dialect of SQL, in many respects close to that of MySQL. Despite these similarities, several essential aspects distinguish HiveQL from standard SQL. Many SQL features are missing or restricted in HiveQL, particularly transactions (full ACID support only arrived in later Hive versions), and its support for subqueries is limited. In return, HiveQL offers better scalability and performance within the Hadoop framework thanks to extensions such as multi-table inserts. The Apache Hive compiler translates HiveQL queries into MapReduce, Tez or Spark jobs.
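To illustrate why multi-table inserts help performance, the Python sketch below fills two result "tables" from a single pass over the source data, which is the idea behind HiveQL's `FROM ... INSERT ... INSERT ...` form quoted in the docstring. The table names, columns and rows are hypothetical, and the code only simulates the effect in memory.

```python
from collections import Counter

# Hypothetical source table "page_views": (user_id, country, device)
PAGE_VIEWS = [
    ("u1", "DE", "mobile"),
    ("u2", "FR", "desktop"),
    ("u3", "DE", "desktop"),
    ("u4", "US", "mobile"),
]


def multi_insert(rows):
    """Fill two target 'tables' from a single scan of the source rows.

    Loosely mirrors a HiveQL multi-table insert such as:
        FROM page_views pv
        INSERT OVERWRITE TABLE views_by_country
            SELECT pv.country, COUNT(*) GROUP BY pv.country
        INSERT OVERWRITE TABLE mobile_views
            SELECT pv.user_id, pv.country WHERE pv.device = 'mobile';
    """
    views_by_country = Counter()
    mobile_views = []
    for user_id, country, device in rows:  # the source is read only once
        views_by_country[country] += 1
        if device == "mobile":
            mobile_views.append((user_id, country))
    return dict(views_by_country), mobile_views


if __name__ == "__main__":
    by_country, mobile = multi_insert(PAGE_VIEWS)
    print(by_country)  # {'DE': 2, 'FR': 1, 'US': 1}
    print(mobile)      # [('u1', 'DE'), ('u4', 'US')]
```

Writing two separate `INSERT ... SELECT` statements would scan `page_views` twice; on very large tables, avoiding that second scan is where the gain comes from.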


Apache Hive: data protection

Integrating Apache Hive with Hadoop systems also lets you use the Kerberos authentication service, which provides reliable mutual authentication and verification between the server and its users. In addition, because HDFS determines the permissions of new Hive files, access is controlled through HDFS user and group permissions, which you are responsible for managing. Another key security aspect is the ability to restore critical workloads in the event of an emergency.
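The way HDFS assigns permissions to newly created files can be made concrete with a small calculation. In the HDFS permissions model, a new file created without an explicit permission starts from mode 0666 and a new directory from 0777, and the configured umask (commonly 022, set via `fs.permissions.umask-mode`) clears bits from that mode. The Python sketch below only reproduces this arithmetic; the actual permissions in a cluster also depend on how it is configured.

```python
FILE_BASE = 0o666  # base mode for new files created without an explicit permission
DIR_BASE = 0o777   # base mode for new directories
UMASK = 0o022      # a common value of fs.permissions.umask-mode


def new_mode(base_mode: int, umask: int) -> int:
    """Mode of a newly created path: the base mode with the umask bits cleared."""
    return base_mode & ~umask


def to_rwx(mode: int) -> str:
    """Render the 9 permission bits as an ls-style string, e.g. 0o644 -> 'rw-r--r--'."""
    chars = "rwxrwxrwx"
    return "".join(c if mode & (1 << (8 - i)) else "-" for i, c in enumerate(chars))


if __name__ == "__main__":
    file_mode = new_mode(FILE_BASE, UMASK)
    dir_mode = new_mode(DIR_BASE, UMASK)
    print(oct(file_mode), to_rwx(file_mode))  # 0o644 rw-r--r--
    print(oct(dir_mode), to_rwx(dir_mode))    # 0o755 rwxr-xr-x
```

With the common 022 umask, every user can read new Hive files by default, so restricting access to sensitive tables means tightening the umask or adjusting owner, group and mode on the table directories.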

What are the benefits of Apache Hive?

Hive offers a large number of useful functions, especially if you want to work with large volumes of data in the context of cloud computing or Big Data as a Service (BDaaS):

- Ad hoc queries

- Data analysis

- Creating tables and partitions

- Support for logical, relational and arithmetic operators

- Monitoring and verifying transactions

- End-of-day reports

- Loading query results into HDFS directories

- Transferring table data to local directories
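Hive can write query results to an HDFS directory (`INSERT OVERWRITE DIRECTORY`) or to a local one (`INSERT OVERWRITE LOCAL DIRECTORY`), by default as text with fields separated by the Ctrl-A character (`\x01`) and NULL values written as `\N`. To sketch what such an export looks like on disk, the Python example below writes rows in that layout and reads them back. The file name `part-0`, the directory and the sample results are hypothetical; Hive chooses its own output file names.

```python
import os
import tempfile

FIELD_DELIMITER = "\x01"  # Hive's default field delimiter (Ctrl-A) for text output
NULL_MARKER = "\\N"       # how NULL values appear in Hive's default text format


def export_rows(rows, directory: str, file_name: str = "part-0") -> str:
    """Write result rows as Ctrl-A-delimited text lines and return the file path.

    The file name is a hypothetical placeholder, not Hive's naming scheme.
    """
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, file_name)
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            values = (NULL_MARKER if v is None else str(v) for v in row)
            f.write(FIELD_DELIMITER.join(values) + "\n")
    return path


def read_exported(path: str) -> list[list[str]]:
    """Read an exported file back, splitting each line on the delimiter."""
    with open(path, encoding="utf-8") as f:
        return [line.rstrip("\n").split(FIELD_DELIMITER) for line in f]


if __name__ == "__main__":
    results = [("DE", 3), ("FR", None)]  # hypothetical query results
    with tempfile.TemporaryDirectory() as tmp:
        out = export_rows(results, os.path.join(tmp, "country_counts"))
        print(read_exported(out))  # [['DE', '3'], ['FR', '\\N']]
```

The unusual Ctrl-A delimiter is rarely found in real data, which is why it makes a safer default separator than commas or tabs.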

The main advantages of Hive are therefore:

- It provides valuable insights into large volumes of data, for example for data mining and machine learning

- It optimizes the scalability, cost-effectiveness and extensibility of large Hadoop frameworks

- It allows you to segment users by analyzing clickstreams

- Thanks to HiveQL, it does not require in-depth knowledge of Java programming

- It provides a competitive advantage by promoting responsiveness and fast, scalable performance

- It can manage data volumes of up to hundreds of petabytes and handle up to 100,000 queries per hour, even without high-end infrastructure

- It makes better use of resources through virtualization, which results in faster computation and loading times depending on the workload

- It supports reliable, fail-safe data protection through better disaster recovery options and the Kerberos authentication service

- It speeds up data insertion because data does not have to be converted into internal database formats (Hive can read and analyze data without manual format changes)

- It is open source

What are the disadvantages of Apache Hive?

The main drawback of Apache Hive is that many systems developed since then offer similar or even better performance. Specialists therefore consider Hive less and less relevant for managing and using databases.

Below are other disadvantages associated with Hive:

- No access to real-time data

- Complex processing and updating of datasets through the Hadoop framework with MapReduce

- High latency, which makes it slower than today's competing systems

Quick overview of the Hive architecture

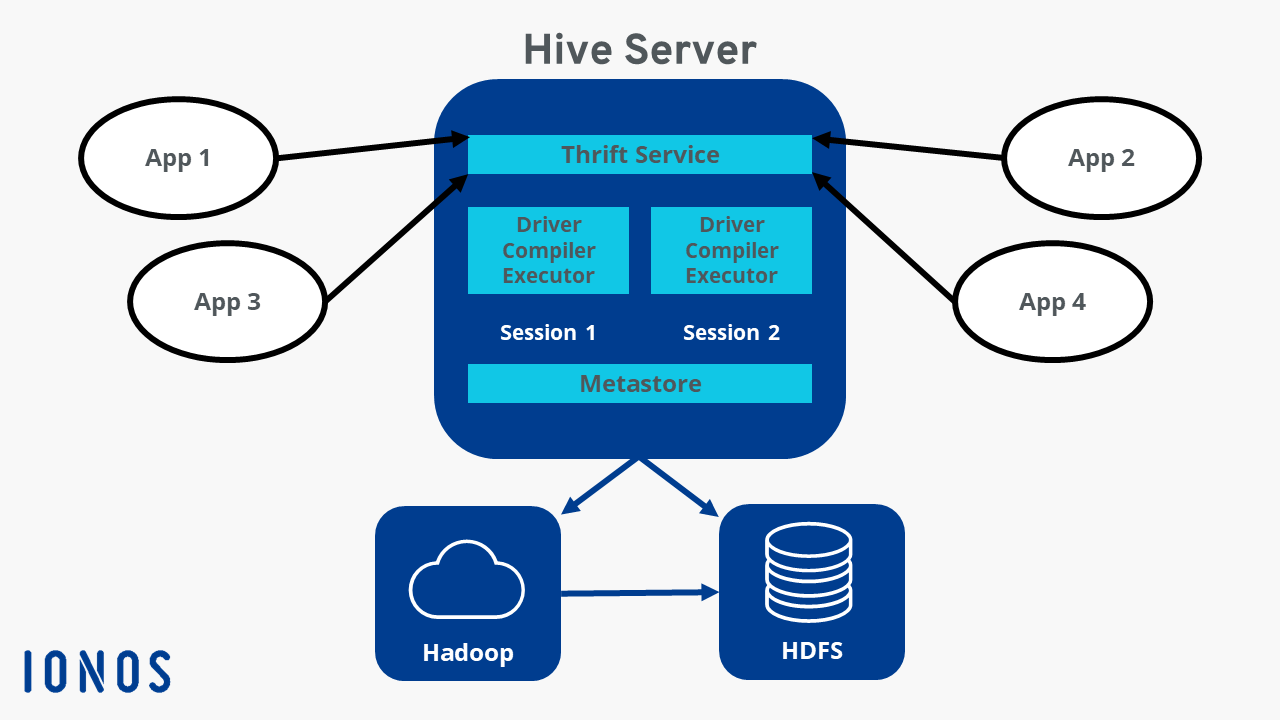

The components below are some of the most important in the Hive architecture:

- Metastore: the central storage location for Hive's metadata. It keeps this information in a relational database management system (RDBMS), for example table definitions, schemas and directory locations, as well as partition metadata

- Driver: it receives HiveQL statements and processes them using the Compiler (to parse the query and collect the required information), the Optimizer (to determine the best execution method) and the Executor (to run the task)

- Command line and user interface: the interface for external users

- Thrift Server: it allows external clients to communicate with Hive over the network, for example through the JDBC (Java Database Connectivity) and ODBC (Open Database Connectivity) protocols
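To show how the metastore and the driver's compile, optimize and execute stages fit together, the following Python sketch models them as toy classes. It is a simplified illustration under strong assumptions: the class and method names are hypothetical, the "query" is just a table name plus a column list instead of parsed HiveQL, the metastore is an in-memory dictionary rather than an RDBMS, and another dictionary stands in for HDFS.

```python
from dataclasses import dataclass, field


@dataclass
class Metastore:
    """Toy metastore: table name -> definition (real Hive keeps this in an RDBMS)."""

    tables: dict[str, dict] = field(default_factory=dict)

    def lookup(self, table: str) -> dict:
        if table not in self.tables:
            raise KeyError(f"Table not found: {table}")
        return self.tables[table]


@dataclass
class Driver:
    """Toy driver passing a query through compile, optimize and execute stages."""

    metastore: Metastore
    storage: dict[str, list[dict]]  # stand-in for files stored in HDFS

    def compile_query(self, table: str, columns: list[str]) -> dict:
        # Compiler: validate the query against the table definition in the metastore
        meta = self.metastore.lookup(table)
        unknown = [c for c in columns if c not in meta["columns"]]
        if unknown:
            raise ValueError(f"Unknown columns: {unknown}")
        return {"columns": columns, "location": meta["location"]}

    def optimize(self, plan: dict) -> dict:
        # Optimizer: column pruning, so each requested column is read only once
        return {**plan, "read_columns": sorted(set(plan["columns"]))}

    def execute(self, plan: dict) -> list[tuple]:
        # Executor: read only the pruned columns, then emit them in the requested order
        rows = self.storage[plan["location"]]
        pruned = [{c: row[c] for c in plan["read_columns"]} for row in rows]
        return [tuple(r[c] for c in plan["columns"]) for r in pruned]

    def run(self, table: str, columns: list[str]) -> list[tuple]:
        return self.execute(self.optimize(self.compile_query(table, columns)))


if __name__ == "__main__":
    location = "/user/hive/warehouse/visits"
    ms = Metastore({"visits": {"columns": ["user_id", "country", "ts"], "location": location}})
    driver = Driver(ms, {location: [
        {"user_id": "u1", "country": "DE", "ts": 1},
        {"user_id": "u2", "country": "FR", "ts": 2},
    ]})
    print(driver.run("visits", ["country", "user_id"]))  # [('DE', 'u1'), ('FR', 'u2')]
```

Separating the stages this way reflects the architecture described above: the compiler depends on the metastore, the optimizer only rewrites the plan, and only the executor touches the stored data.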

How was Apache Hive created?

Apache Hive was designed to help users work with data volumes of up to several petabytes using SQL rather than Java. Hive was developed in 2007 by its founders, Joydeep Sen Sharma and Ashish Thusoo, while they were working at Facebook on the social network's Hadoop framework, one of the largest in the world (several hundred petabytes). In 2008, Facebook handed the Hive project over to the open source community. Version 1.0 was released to the public in February 2015.